Elon Musk: AI Potentially More Dangerous Than Nukes

Artificial intelligence takes over the world and exterminates the human race or condemns its remnants to living in subterranean slums. Sound familiar? It's the premise of several sci-fi movies. Or is it? Over the weekend, Tesla CEO Elon Musk tweeted: "We need to be super careful with AI. Potentially more dangerous than nukes."

Fact or Fiction?

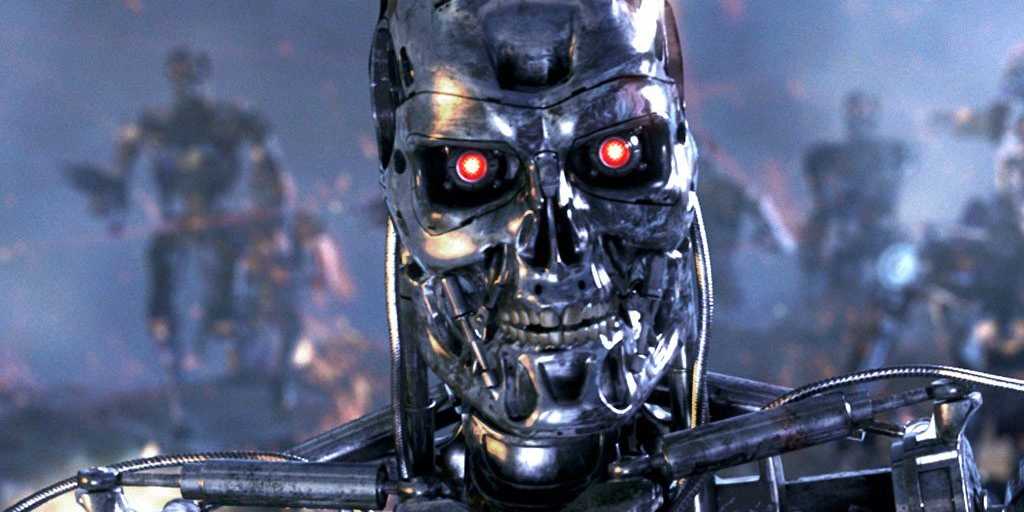

We have always been mesmerized by the possibility of a future shaped by artificial intelligence. Battlestar Galactica, The Terminator, The Matrix, and most recently Transcendence are just some of the TV shows and films that try to paint a picture of what the future might look like if a superintelligence decided to take over. While all of this is fiction, it could become reality, just not anytime soon. According to Bruno Olshausen, a professor at Berkeley, our research into how the human brain works is still in its infancy.

That's not to say AI programs should be left unchecked, as evidenced by Musk's backing of Vicarious, an organization dedicated to developing and providing services based on artificial intelligence. In an interview with CNBC, Musk explained that his interest in Vicarious is to keep an eye on the developing field.

Future Potential

It can be argued that human potential will skyrocket with the help of AI. Healing our planet, curing sickness and disease, and propelling us into the stars are just some of the hopeful possibilities that the advent of a true AI might make achievable. But along with the hypothetical benefits come the possible doomsday scenarios we have all grown accustomed to seeing on the big screen. Which of the two extremes will we land on? Only time will tell.

Musk posted another tweet the same day: "Hope we're not just the biological boot loader for digital superintelligence. Unfortunately that is increasingly probable." It is a disturbing but well-warranted thought. We live in an age where technology advances by leaps and bounds each year. It is quite possible that we could see a doomsday-like event before we understand even half of how the human brain works. A scenario in which a "half-baked" AI becomes self-aware and begins making very bad decisions for the rest of us isn't out of the realm of possibility 20 or 30 years from now.

Ever Vigilant

To prevent potential disasters, it is in everyone's interest to keep a watchful eye on the development of AI. The potential benefits could be enormous, but one wrong turn could make the potential for disaster just as great. This field is both exciting and terrifying. Monitoring its progress is paramount, which is why Elon Musk's stance on this should be one we all share.

The question that remains is how difficult it will be to monitor AI. It won't necessarily be something tangible, and if technology keeps advancing at its current pace, monitoring it becomes a troublesome hurdle to clear. We will all just have to keep a lookout for Cylons. Or time displacement spheres from the future.